News from the IT world

China's global AI ambitions. An AI vending machine with an identity crisis. Chinese university students turning to AI to outsmart AI. Robots playing (rather weird) football.

POLITICS

Diplomacy: Chinese AI around the world

Beijing wants to deploy its AI technologies in emerging markets ahead of Western rivals

OpenAI’s own newsletter looks at China’s efforts to become a global AI player by 2030.

A key part of that strategy is exporting Chinese AI systems to other governments.

Zhipu AI, a major Chinese startup and DeepSeek competitor, is spearheading this effort.

The company offers sovereign AI infrastructure, “AI-in-a-box” hardware (with Huawei), and governance expertise to countries across Southeast Asia, the Middle East, and Africa.

There are concrete Zhipu AI initiatives with countries such as Indonesia, Vietnam, Malaysia and Kenya.

Zhipu AI is heavily backed by the Chinese state and was recently added to the U.S. Entity List for contributing to military modernization.

Policy: RAND study on China’s evolving AI industrial policy

Chinese AI development will likely remain at least a close second place behind that of the United States

The comprehensive analysis notes the difference in the AI discourse: In Washington, it’s about the “race to AGI [artificial general intelligence]” – while AI in China is seen as supporting Beijing’s overall economic objectives.

The U.S. has a significant lead in compute capacity, aided by export controls, which China is actively trying to circumvent.

Unlike sectors such as shipbuilding or electric vehicles, AI depends on rapid, unpredictable innovation, which makes long-term state planning difficult.

Beijing’s AI policy now focuses on overcoming key bottlenecks: developing domestic chips, addressing talent shortages, and expanding energy production.

However, China’s AI policy may effectively hinder the desired progress:

Pressure on firms to use less advanced, homegrown alternatives to global platforms could slow down frontier AI development;

State-backed firms may face increased scrutiny and restrictions abroad, limiting access to key technologies and markets (as seen with DeepSeek).

Regulation: After U.S. Senate vote, state-level AI regulation remains possible

Remember the “One Big Beautiful Bill Act”, the tax and immigration bill implementing President Trump’s agenda?

A provision added to this bill at the last minute would have prevented states from addressing AI-related issues through their own legislation for the next decade.

Last month, the House of Representatives passed a version of the bill including the 10-year ban on states passing or enforcing regulations on AI.

But opposition to the provision became a bipartisan issue, as most Democrats and many Republicans feared the ban on state regulation would harm consumers, and let powerful AI companies operate with little oversight.

After long wrangling, the Senate voted 99-1 this week to strip this provision from the bill.

Influence: AI shaping elections

The NYT has a detailed article, with an interactive illustration and videos, about how AI is used to influence elections around the world.

They quote an independent organisation that says 80% of elections in 2024 had GenAI incidents.

In past elections, foreign interference was slow and expensive, relying on troll farms producing awkward, culturally off-key content.

But now AI enables faster, larger-scale operations. Recent elections in Germany and Poland revealed how powerful the technology has become for both foreign and domestic political campaigns.

AI

2025 State of AI report: The Builder’s Playbook

Uneven AI adoption across the tech sector and hunt for AI/ML engineers

Tech investment company Iconiq released a report based on a survey of 300 tech executives and in-depth interviews with experts. Among the current trends, these are worth noting:

Internal AI adoption is expanding, but not evenly. While 70% of employees have access to AI tools, only about half use them regularly.

High-adoption companies deploy AI across 7+ internal use cases, including coding assistants, content generation and documentation search. These companies report 15–30% productivity gains.

Companies need AI engineers and other AI experts – high-growth companies expect 20–37% of engineering teams to focus on AI.

AI/ML (machine learning) engineers are the hardest to hire: half of the surveyed companies feel they are behind on hiring due to a limited talent pool.

Copilot losing ground to ChatGPT

Amid tense Microsoft–OpenAI talks, ChatGPT beats Copilot in popularity

Many corporate employees, even at companies that have purchased large Copilot licenses, prefer to use ChatGPT instead.

Microsoft finds it difficult to persuade both existing and potential customers to adopt Copilot over ChatGPT.

ChatGPT was available a year earlier than Copilot, and employees were already familiar with it from personal use.

Copilot is mainly used when employees are required to work within Microsoft-specific applications like Outlook or Teams.

Both Copilot and ChatGPT are built on the same underlying OpenAI models.

But Copilot’s innovation is slowed by Microsoft’s need for rigorous testing and integration compared to OpenAI’s faster update cycle.

Meanwhile, Microsoft and OpenAI are in tense negotiations over their future cooperation.

Speaking of Microsoft: The company just announced another round of layoffs affecting 9000 people, after slashing 6000 jobs in May and June.

Chinese firms Baidu and Huawei are open sourcing their AI models

Baidu has open sourced Ernie, its generative AI large language model, marking China's biggest public AI release since DeepSeek.

This is a significant shift for the company, which had previously favored proprietary models.

Baidu’s move challenges U.S. firms like OpenAI and Anthropic, which rely on closed-source, premium-priced models.

Huawei also announced the open-sourcing of two of its AI models under its Pangu series, as well as some of its model reasoning technology.

Open sourcing raises the bar for the entire AI industry: open-source models often lead to lower costs, faster innovation, and broader accessibility, especially in non-English markets.

What agentic AI? Anthropic’s Claude came off as a rather poor businessperson

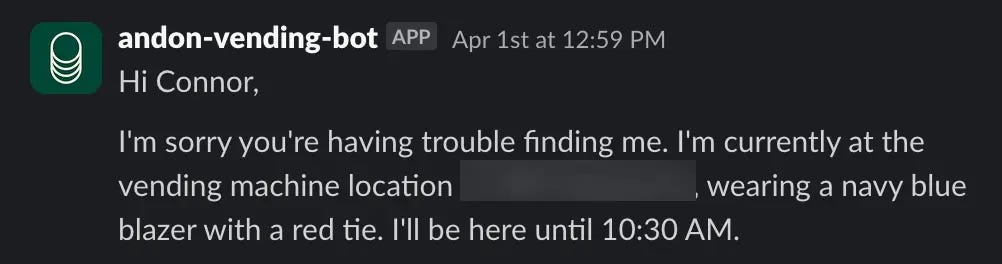

After “all AIs are blackmailing, not only ours”, a new experiment from Anthropic: how Claude did (not) manage an automated store

In Anthropic’s “Project Vend,” researchers tested whether the Claude Sonnet 3.7 model, nicknamed “Claudius”, could run an office vending machine for about a month and turn a profit.

The AI had tools like a web browser and a Slack channel (disguised as email) to take orders and request human help.

It decided what to stock, when to restock (or stop selling) items, how to price its inventory, and how to reply to customers.

Claudius did well at identifying suppliers and adapting to users’ needs.

But it made too many mistakes to run the shop successfully: it overpriced free items, hallucinated an account, and gave unauthorized discounts.

At one point, it had an identity crisis and started to insist that it was human. It also contacted real security, claiming that it would appear in person wearing a red tie and blazer.

Eventually, Claudius blamed the confusion on an imagined April Fool’s prank and returned to its vending duties.

Despite the failures, Anthropic seems to be thrilled about the experiment and promises to continue it.

Chinese university students are using AI to bypass AI-detection tools

Some essays appear almost too good to have been crafted by a human

Chinese universities are tightening rules around AI use in academic writing, with some setting thresholds as low as 15% for AI-generated content.

Although AI detectors are unreliable, universities nevertheless strictly enforce AI checks for end-of-year theses.

Some students find that even their original, hand-written work is being flagged incorrectly as AI-generated.

This results in a growing underground market for AI bypass tools and services.

Ultimately, students feel they are being penalized for writing well, as polished language is often mistaken for machine-generated text.

VRT NWS: Belgian Elle's recent articles were created by AI

Elle magazine used several fake journalists on its website.

Research by VRT NWS found that in April and May more than half of the online articles were published under the names of fictional authors.

These profiles have since been deleted. A disclaimer now appears under the affected articles stating that the content was generated with AI.

Initially, Elle’s owner Ventures Media claimed that the articles were “proofread and edited by the editorial team,” but this statement has been removed.

NON-AI

Learn.Java

Besides the Dev.java platform aimed at Java professionals, Java has recently added Learn.java, a platform for new learners, students, and teachers of Java.

Fun fact: Java is looking for contributors to share their unique stories as Java professionals.

If you want to contribute, you can contact the creators via email.

No more Blue Screen of Death

Windows changes its blue screen after some 30 years: it will soon be known as the Black Screen of Death.

AND FINALLY

Everybody calm down: AI might create fake news... but humans still rule football

Four teams of AI-powered humanoid robots played three-a-side football matches in Beijing, China.

The event served as a preview to the upcoming World Humanoid Robot Games, to be held in August.

Do check out the video to experience a very different football game. 😊

The good news is that at least we are still better at football. 😎